By AgilePoint | June 7, 2025

The promise of agentic AI is big: faster reactions, smarter systems, and fewer people stuck doing repetitive work. But what are AI hallucinations? They are AI outputs that read smoothly enough to pass for the result of a quick Google search, yet are wrong in small but important ways. Those errors can ripple through anything that depends on them.

AI hallucinations are answers that sound right but aren't. Sometimes AI simply makes things up. It isn't trying to trick anybody; it's trying to fill the gaps when it doesn't have what it needs. Sometimes that shows up as a wrong number, sometimes as a confident explanation of something that never happened.

These slip-ups happen because most models are trained on open data, not the kind of private, detailed information that runs a business. They don’t understand your process names, your workflows, or how one department’s choices affect another.

When the model hits a blank, it doesn't stop; it fills the space so the story keeps moving. That might pass in a casual chatbot exchange, but in a business it can cause real messes.

In simpler terms, it’s like giving an intern half the files and expecting a perfect report. Without the full story, the system fills gaps the only way it knows how — by guessing. When that guess runs across multiple data silos, the errors multiply faster than most teams can catch them.

One of the key reasons for the hallucination challenge is that most off-the-shelf LLM/GenAI models were not trained on enterprise data. On their own, these models don’t understand your business context, your processes, or the way decisions really get made inside your organization.

Another issue sits underneath all of this. The data inside most organizations isn’t organized in a way AI can actually use. It’s split across platforms and business units, so agents are guessing around the gaps instead of working from a single, trusted view.

Hallucinations don't always show up in obvious ways. A model might summarize a report, quote a number, or write an email with the same confidence as if it had verified every word, even when it hasn't.

Think about a finance team that loves its banking automation software. On the surface everything balances, yet key transactions are missing in the background. Or consider a chatbot that writes customer updates that sound perfect but cite rules that don't exist. Small slips like these get amplified when people trust the results without checking.

It happens in manufacturing process automation as well. A scheduling tool might combine two entries and create a phantom order that throws off an entire production run. When data moves between generative AI systems without the right checks, the results can look solid but rest on gaps nobody noticed.

Imagine an AI agent grabbing customer data from one system and checking it against another that has not been updated in months. At first the response looks solid. Then someone spots a client record or price that is off, and you realize people have been making decisions on bad numbers.

That’s the nature of large language models (LLMs). They pull from huge amounts of internet data, much of it uneven or outdated. The system learns to predict the next word, not whether it’s the correct answer. It’s confident, not careful.
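To make the "predict the next word" point concrete, here is a toy bigram model in Python. It is a deliberately tiny sketch, nowhere near how a production LLM works internally, and the corpus is invented for illustration. It counts which word tends to follow which and picks the most frequent follower, so when stale statements outnumber the current one, it confidently repeats the stale figure.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count which word follows which across all sentences."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model


def predict_next(model: dict[str, Counter], word: str) -> str:
    """Return the most frequent follower of `word`; truth plays no part in the choice."""
    followers = model.get(word.lower())  # .get() does not create a new defaultdict entry
    if not followers:
        return "<unknown>"
    return followers.most_common(1)[0][0]


# Hypothetical corpus: outdated statements outnumber the current one.
corpus = [
    "the discount is 10 percent",
    "the discount is 10 percent",
    "the discount is 15 percent",  # the only up-to-date statement
]
model = train_bigram(corpus)
print(predict_next(model, "is"))  # prints "10"
```

The model is not "lying"; frequency is the only signal it has, which is exactly why it is confident rather than careful.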

The way to keep hallucinations in check is to ground the model in solid information. It needs context and accurate data it can trust. In enterprise use, that means connecting systems so the model sees the full picture instead of fragments.

It all starts with clean training data. When training data is insufficient or factually incorrect, the system starts guessing. Even a small gap can throw off the numbers and send a project in the wrong direction.

To keep things on track, teams use a mix of guardrails, hallucination mitigation techniques, and verification tools. Structured prompts, retrieval-augmented generation (RAG), and explainable AI all help ground answers and expose weak spots before output reaches anyone else. Each method adds a layer of safety, surfacing low-confidence answers before decisions get made on shaky ground.
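One simple verification check: before an answer is released, confirm that every figure it quotes can actually be found in the passages it was grounded on. The Python sketch below assumes you already have the retrieved source passages as plain text; the function name and sample data are hypothetical, and it only does exact string matching, whereas real verification tools also handle units, formatting, and meaning.

```python
import re

# Matches integers and decimals such as 4471, 1,250.00 or 3.5
NUMBER = re.compile(r"\d+(?:[.,]\d+)*")


def ungrounded_numbers(answer: str, sources: list[str]) -> list[str]:
    """Return numbers in the answer that appear in none of the source passages."""
    source_numbers: set[str] = set()
    for passage in sources:
        source_numbers.update(NUMBER.findall(passage))
    return [n for n in NUMBER.findall(answer) if n not in source_numbers]


# Hypothetical retrieved passage and model answer with a transposed amount.
sources = ["Invoice 4471 was paid on 2025-05-02 for 1,250.00 USD."]
answer = "Invoice 4471 was paid for 1,520.00 USD."

flagged = ungrounded_numbers(answer, sources)
if flagged:
    print("Hold for review; unsupported figures:", flagged)
```

Anything flagged is held back rather than passed downstream, which is the point of checking before decisions get made.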

Adding human oversight helps, too. Even the best generative AI models can miss subtle context, so pairing automation with human review helps catch misleading output before anyone acts on it. The mix of expertise and transparency makes all the difference: it turns guessing into understanding.

A lot of early agent projects plug AI agents straight into core systems through APIs or the Model Context Protocol (MCP), without a layer that explains how the business actually works. On paper it looks powerful. In practice, you now have an agent hitting live systems with only a partial view of the data and almost no sense of how people really use it.

When the agent cannot see across departments or systems, it starts filling in the blanks. It treats one system as the truth, even when another system tells a different story. The output still looks polished, but pieces of it are guessed or out of context. That is how hallucinations show up in real life: wrong prices, bad routing, or approvals that never should have gone through.

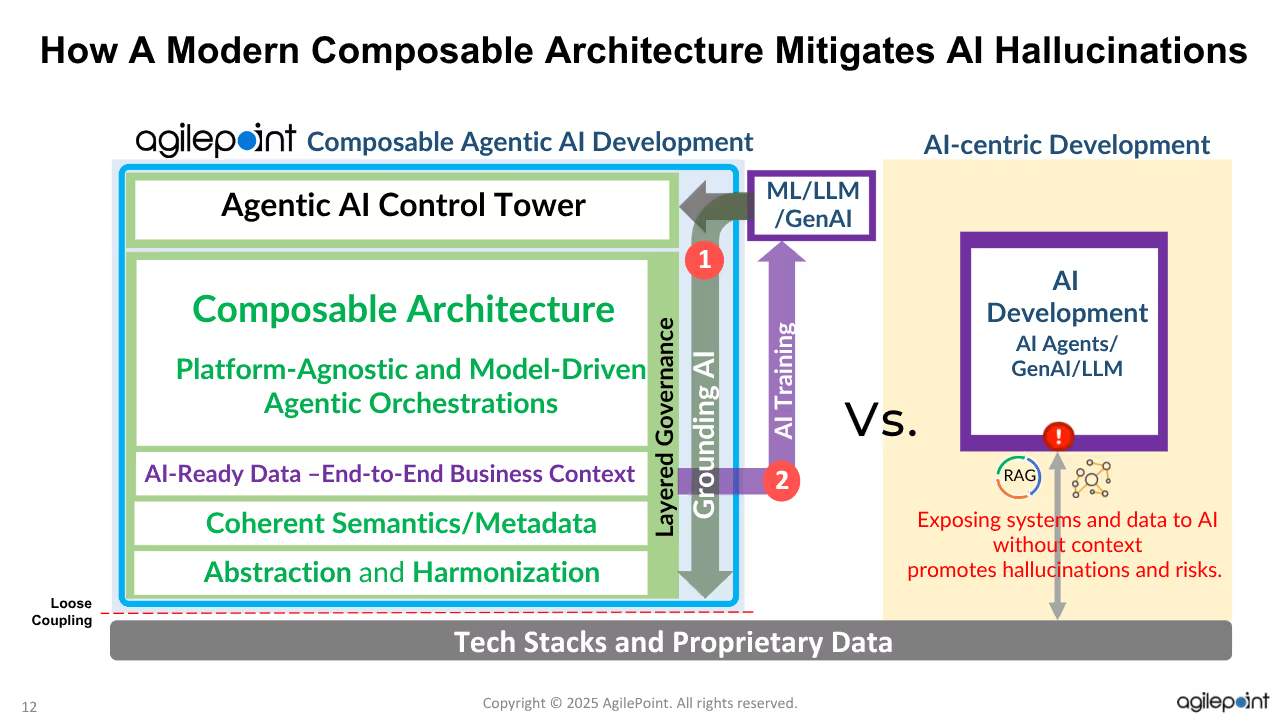

The AgilePoint no-code, agentic-ready, composable platform eliminates hallucinations to provide safe, enterprise-grade, end-to-end agentic orchestrations through two critical components:

A layered governance framework creates guardrails that filter non-deterministic AI output and keep it grounded. You set the rules first: regulations, security rules, and who is allowed to see or change what. Every agent call has to go through that layer, so if something breaks those rules, it simply does not go through.

That same layer stops agents from approving the wrong deals, touching data they should not see, or pushing changes that break company policy, even if the underlying model suggests otherwise.
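The rule-first layer described above follows a familiar pattern: every action an agent proposes is checked against explicit rules before it touches a live system. The Python sketch below illustrates only that generic pattern, not the platform's actual implementation; the roles, operations, resources, and approval limits are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentAction:
    agent_role: str
    operation: str   # e.g. "approve_deal", "read_record"
    resource: str    # e.g. "deals", "payroll"
    amount: float = 0.0


# Hypothetical rules: which (operation, resource) pairs each role may use, and approval limits.
PERMISSIONS = {
    "sales_agent": {("read_record", "deals"), ("approve_deal", "deals")},
    "support_agent": {("read_record", "tickets")},
}
APPROVAL_LIMITS = {"sales_agent": 10_000.0}


def check_action(action: AgentAction) -> tuple[bool, str]:
    """Return (allowed, reason). Runs before any action reaches a live system."""
    allowed_ops = PERMISSIONS.get(action.agent_role, set())
    if (action.operation, action.resource) not in allowed_ops:
        return False, f"{action.agent_role} may not {action.operation} on {action.resource}"
    if action.operation == "approve_deal":
        limit = APPROVAL_LIMITS.get(action.agent_role, 0.0)
        if action.amount > limit:
            return False, f"amount {action.amount} exceeds limit {limit}; route to a human"
    return True, "ok"


def execute(action: AgentAction) -> str:
    """Gate every call; the success branch is a placeholder for the real system call."""
    allowed, reason = check_action(action)
    if not allowed:
        return f"blocked: {reason}"
    return f"executed {action.operation} on {action.resource}"


print(execute(AgentAction("sales_agent", "approve_deal", "deals", amount=25_000)))
print(execute(AgentAction("support_agent", "read_record", "payroll")))
```

Because the check sits outside the model, a blocked action stays blocked no matter how confidently the model proposed it.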

End-to-end abstraction and harmonization create coherent semantics, based on unified metadata, across 120+ systems. This approach captures business context as AI-ready data designed specifically for training AI agents.

Skip the one-size-fits-all models. Here, AI agents are tuned to your processes, guardrails, and priorities, so they reflect how your organization really works day to day.

This is where the next generation of abstracted, composable architecture comes in, serving as an adaptive, no-code, platform-agnostic orchestration layer. This architectural approach addresses the hallucination challenge through three critical capabilities:

Most companies still have sales in one system, support in another, and billing in something older that nobody wants to touch. Instead of sending agents into each system and hoping they guess how things connect, you line the data up once in a shared catalog.

You pick a single system of record for each customer or order and give it a clean ID. AI agents pull from that source, so they are not juggling conflicting entries.
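Lining the data up once can be pictured as a small harmonization step. The Python sketch below is a simplified illustration under stated assumptions: customers are matched by normalized email address, the billing system is designated as the system of record for price tier, and canonical IDs are assigned in sorted order (a real catalog would persist them). All names are hypothetical, and real entity resolution is usually fuzzier than an exact email match.

```python
from dataclasses import dataclass


@dataclass
class SourceRecord:
    system: str      # e.g. "crm", "billing", "support"
    local_id: str    # the ID used inside that system
    email: str
    price_tier: str


# Hypothetical choice: billing is the system of record for price tier.
SYSTEM_OF_RECORD = "billing"


def harmonize(records: list[SourceRecord]) -> dict[str, dict]:
    """Group records by normalized email and emit one canonical entry per customer."""
    by_customer: dict[str, list[SourceRecord]] = {}
    for rec in records:
        key = rec.email.strip().lower()
        by_customer.setdefault(key, []).append(rec)

    catalog: dict[str, dict] = {}
    for n, (email, recs) in enumerate(sorted(by_customer.items()), start=1):
        canonical_id = f"CUST-{n:05d}"  # simplification: not stable as customers are added
        tiers = {r.price_tier for r in recs}
        # Prefer the system of record; fall back to the first record seen.
        trusted = next((r for r in recs if r.system == SYSTEM_OF_RECORD), recs[0])
        catalog[canonical_id] = {
            "email": email,
            "price_tier": trusted.price_tier,
            "source_ids": {r.system: r.local_id for r in recs},
            "conflict": len(tiers) > 1,  # flag for review instead of guessing
        }
    return catalog


records = [
    SourceRecord("crm", "C-88", "Ana@Example.com", "gold"),
    SourceRecord("billing", "B-1203", "ana@example.com ", "silver"),
    SourceRecord("support", "T-7", "bo@example.com", "bronze"),
]
catalog = harmonize(records)
```

Agents then read `catalog` instead of querying each system, and any entry marked as a conflict is a signal to check rather than a gap to fill.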

The platform natively generates AI-ready data by capturing the end-to-end business context with coherent semantics across platforms. As a result, agents learn from the real flow of work, including the steps people follow, the approvals they give, and how systems pass data around, rather than from disconnected rows in a table.

The architecture enables real-time, multi-vendor, and multi-agent goal-driven optimization without requiring code generation or changes to existing code. This approach eliminates the risk of AI-generated code introducing unpredictable behaviors while maintaining the agility needed for continuous improvement.

The transition from experimental AI implementations to production-ready agentic systems requires more than advanced AI models. It demands a fundamental rethinking of how AI agents access, understand, and act on enterprise data.

By eliminating hallucinations through proper abstraction, harmonization, and governance, organizations can build the trust necessary to deploy agentic systems that truly transform business operations.

The choice is clear: continue with the current approach and risk joining the 42% of organizations abandoning their AI initiatives, or adopt the architectural foundation necessary for safe, reliable, and transformative agentic AI implementation.

The future of enterprise automation depends on getting this foundation right. With the proper approach to eliminating hallucinations, real-time agentic systems can finally deliver on their transformative promise. Contact us to build that foundation.

Can hallucinations be stopped for good? Not completely. Hallucinations are baked into how large language models work, because the models are always predicting what should come next rather than checking against a master list of facts. The good news is that you can make them far less common by pairing models with clean data, tight scopes, and review steps.

Under the hood, these models learn patterns from huge amounts of text. When the training data has gaps or odd examples, the model still has to produce an answer, so it guesses based on what looks similar. It doesn't realize it's wrong. It is simply completing a sentence, without knowing that the facts behind that sentence are shaky.

ChatGPT learns from large collections of public text, then tries to predict the next word in a way that sounds natural. The underlying model does not check your live systems or double-check every statement against a database. That is why you can get answers that read smoothly but miss a date, mix up names, or invent a source. The style feels right even when the details are off.

How often do models hallucinate? There's no exact rate. Some days you hardly notice them; on others you catch a few in a row. Open-ended, chatty questions usually produce more made-up detail than narrow tasks tied to real data. The only honest way to know for your own company is to run trials, mark the misses, and keep score over time.
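Keeping score does not require special tooling; a log of reviewed answers is enough to start. The Python sketch below assumes reviewers mark each trial answer as hallucinated or not, and tracks the miss rate per week and per task type, so you can see whether narrow, data-grounded tasks really fare better than open questions in your own environment. Field names and sample data are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trial:
    week: str           # e.g. "2025-W23"
    task_type: str      # e.g. "open_question", "invoice_lookup"
    hallucinated: bool  # marked by a human reviewer


def hallucination_rates(trials: list[Trial]) -> dict[tuple[str, str], float]:
    """Share of reviewed answers marked as hallucinated, per (week, task type)."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    misses: dict[tuple[str, str], int] = defaultdict(int)
    for t in trials:
        key = (t.week, t.task_type)
        totals[key] += 1
        misses[key] += int(t.hallucinated)
    return {key: misses[key] / totals[key] for key in sorted(totals)}


trials = [
    Trial("2025-W23", "open_question", True),
    Trial("2025-W23", "open_question", False),
    Trial("2025-W23", "invoice_lookup", False),
    Trial("2025-W23", "invoice_lookup", False),
]
for (week, task), rate in hallucination_rates(trials).items():
    print(f"{week} {task}: {rate:.0%}")
```

Tiny samples like this one say little on their own; the value comes from running the same tally week after week.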

Start by assuming the model will be wrong sometimes, then design around that fact. Use it on well-framed tasks, ground it in trusted data, and add checks where answers really matter. That can include retrieval from internal systems, guardrails on what the agent is allowed to do, and human review for high-risk actions.

Real progress comes from teams that question results, adjust fast, and stay transparent about how AI decisions are made. The more people stay part of the loop, the smarter and more dependable these systems become.

Ready to take the next step? Let’s talk about how your team can build AI that works the way your business does. Contact us to start your roadmap.